- Best overall free personal CRM tool: HubSpot

- Best for visualizing personal timelines: Pipedrive

- Best personal CRM for task and time tracking: monday CRM

- Best for designing personal project databases: Notion

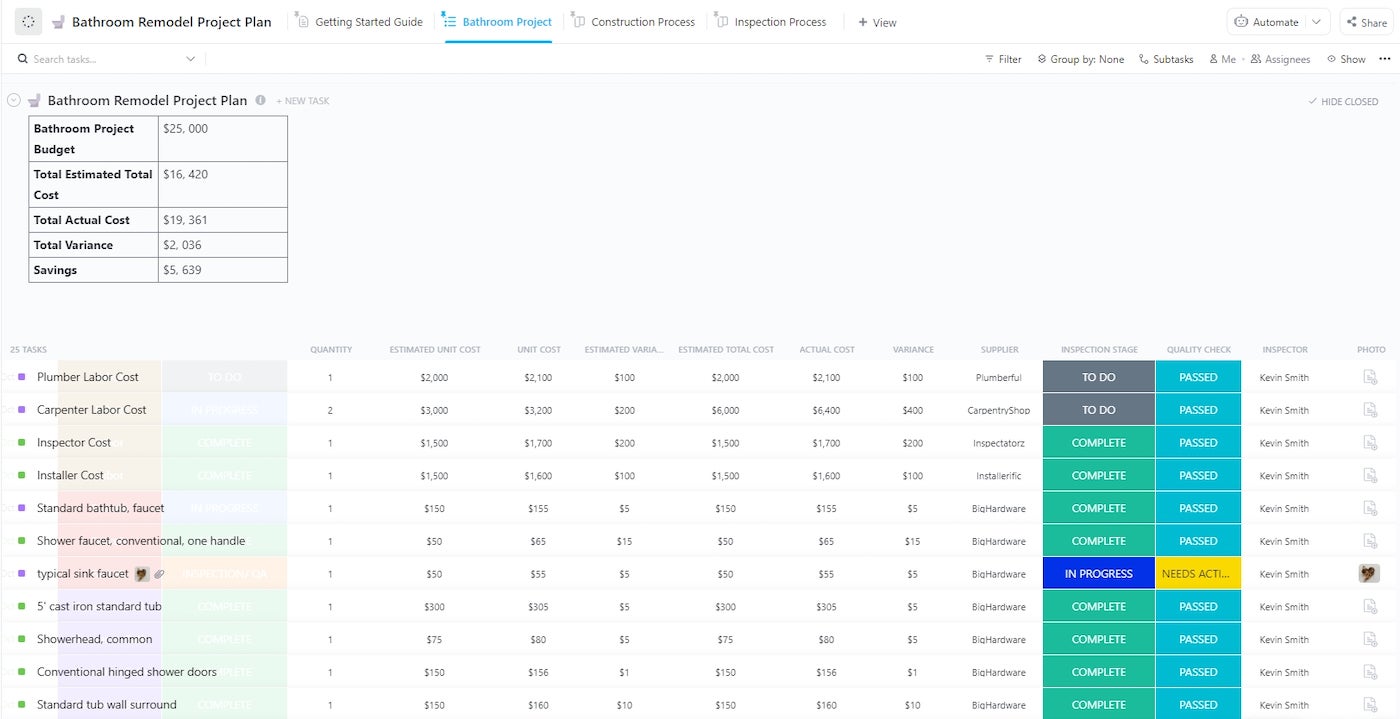

- Best customizable personal CRM: ClickUp

Personal customer relationship management (CRM) software helps individual users organize, manage, and track their contacts, schedules, and communications. These platforms have features like a centralized contact database, calendar integrations, cadence reminders, and meeting scheduling.

The best personal CRM offers these features for free or at an affordable price. Platforms like HubSpot, Notion, and ClickUp have free options with robust CRM, contact management, and customization features. Pipedrive and monday CRM, on the other hand, work best for individuals seeking a more basic solution.

Top personal CRM software comparison

The top personal CRMs let you group contacts, sync the system with your calendar, and set cadence reminders for tasks and appointments. As a result, you can easily maintain personal relationships, remember important events, and reconnect with your contacts at the right time.

HubSpot: Best overall free personal CRM tool

Features

- Contact administration: Handle your private contacts, hyperlink associated information, and fix notes to every contact file.

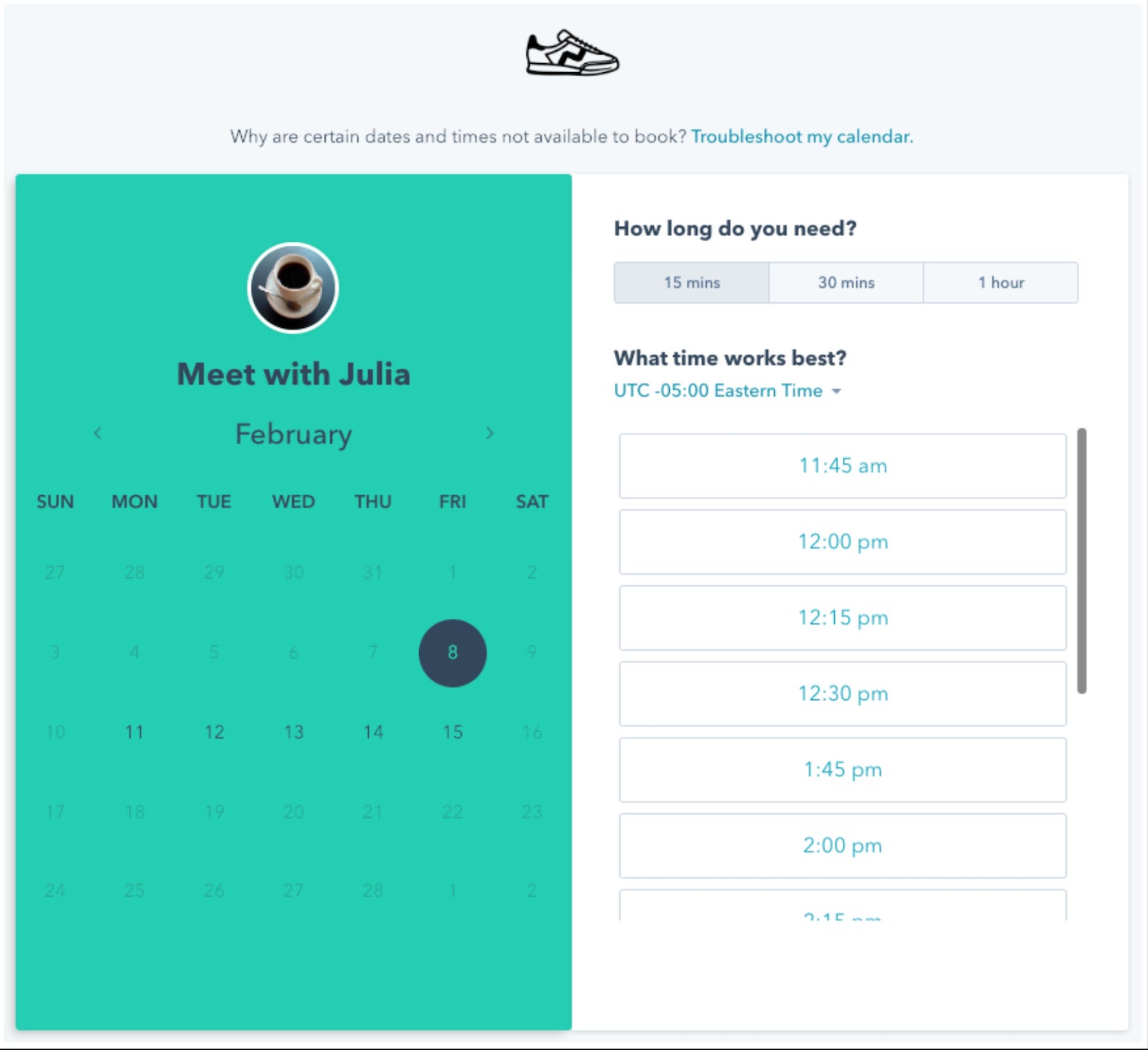

- Assembly scheduler: Robotically ebook conferences along with your private contacts and sync appointment schedules along with your Google or Workplace 365 calendar.

- Multichannel communications: HubSpot CRM means that you can attain out to your contacts by way of e mail, Fb Messenger, Slack, and cellphone.

Pros and cons

| Pros | Cons |
|---|---|

Pipedrive: Best for visualizing personal timelines

Overall score: 4.67/5

Pricing: 4.38/5

General features: 4.72/5

Ease of use: 5/5

Support: 4.38/5

Expert score: 4.19/5

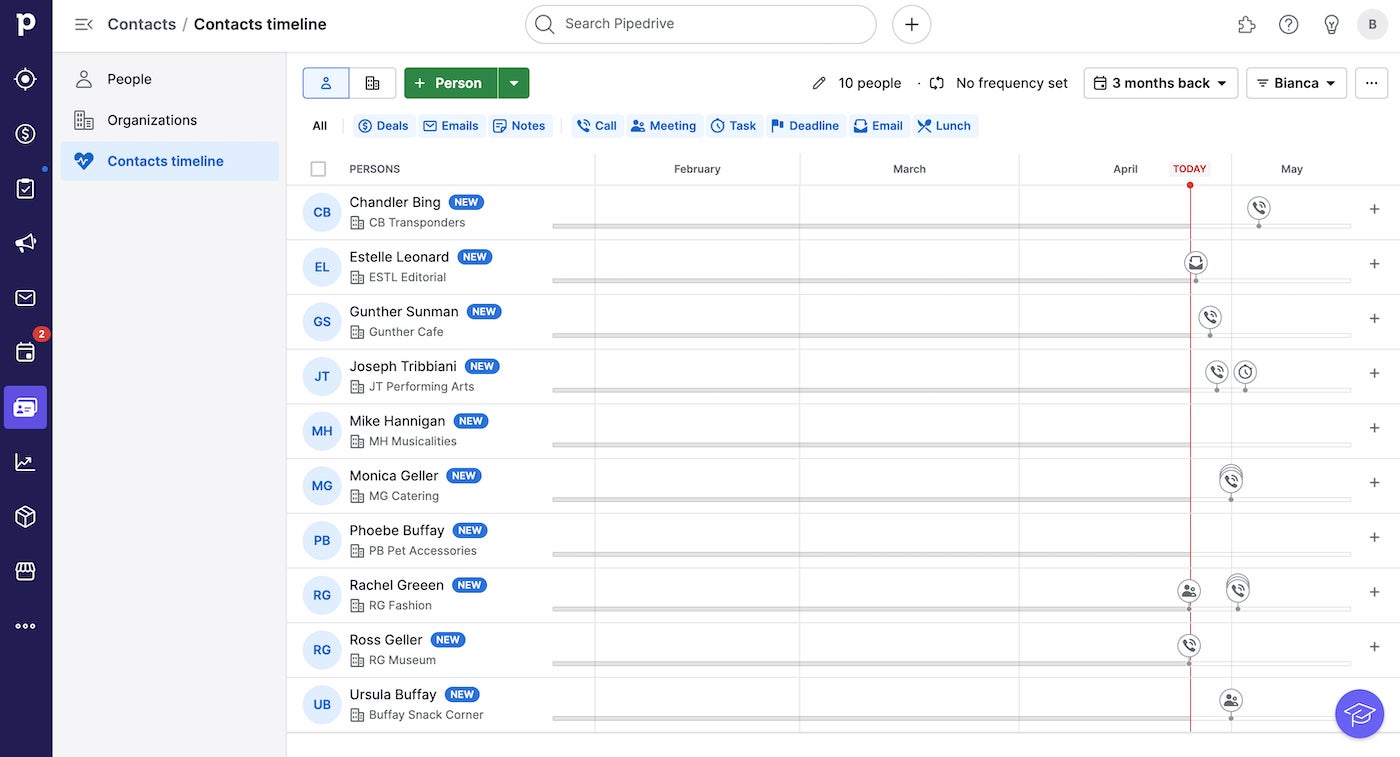

Pipedrive is a sales-focused CRM that individuals can leverage to organize and grow their personal networks. It allows you to sync your address book, email, and calendar so you can easily keep track of meeting cadences. In addition to its robust contact management features, it includes systematized to-do lists, collated note-taking features, and appointment reminders.

SEE: 10 Best CRM Software for 2025

Why I chose Pipedrive

Pipedrive's basic CRM features and simple interface make contact organization and activity tracking a breeze. Its drag-and-drop functionality makes it easy for users to customize visual contact timelines. Plus, it allows you to automate follow-up emails and recurring reminders for appointments, daily tasks, and personal routines. However, to access its document and project management tools, you must pay add-on fees or subscribe to higher tiers.

Pipedrive is a great option if you're looking for a simple personal CRM, but some advanced tools are more expensive than those offered by other providers. If you want more affordable appointment scheduling, calendar sync, and document management tools, try HubSpot CRM.

For more, head over to my detailed Pipedrive review.

Pricing

- Essential: $14/user/month, billed annually, or $24 when billed monthly. This includes customizable visual pipelines and dashboards, contact management, file attachments, and activity reminder notifications.

- Advanced: $39/user/month, billed annually, or $49 when billed monthly. This includes everything in the Essential plan, plus two-way email sync, customizable email templates, email scheduling, and automation.

- Professional: $49/user/month, billed annually, or $69 when billed monthly. This includes everything in the Advanced plan, plus multiple email account sync, document management, and unlimited scheduling links.

- Power: $64/user/month, billed annually, or $79 when billed monthly. This includes everything in the Professional plan, plus project management and phone support.

- Enterprise: $99/user/month, billed annually, or $129 when billed monthly. This includes everything in the Power plan, plus security settings and the highest feature limits.

Features

- Visual contacts timeline: Pipedrive lets you drag and drop reminders, events, meetings, and goals into a visual contacts timeline for easier task organization.

- Meeting scheduler: Use Pipedrive's scheduling tool to sync your calendars, share your availability, and schedule emails, video calls, and appointments.

- Smart Docs: Centralize your document management process, send trackable files, auto-fill documents with data from Pipedrive records, and sign documents electronically.

Pros and cons

| Pros | Cons |
|---|---|

monday CRM: Best personal CRM for task and time tracking

Overall score: 4.46/5

Pricing: 3.88/5

General features: 4.61/5

Ease of use: 4.5/5

Support: 4.69/5

Expert score: 4.25/5

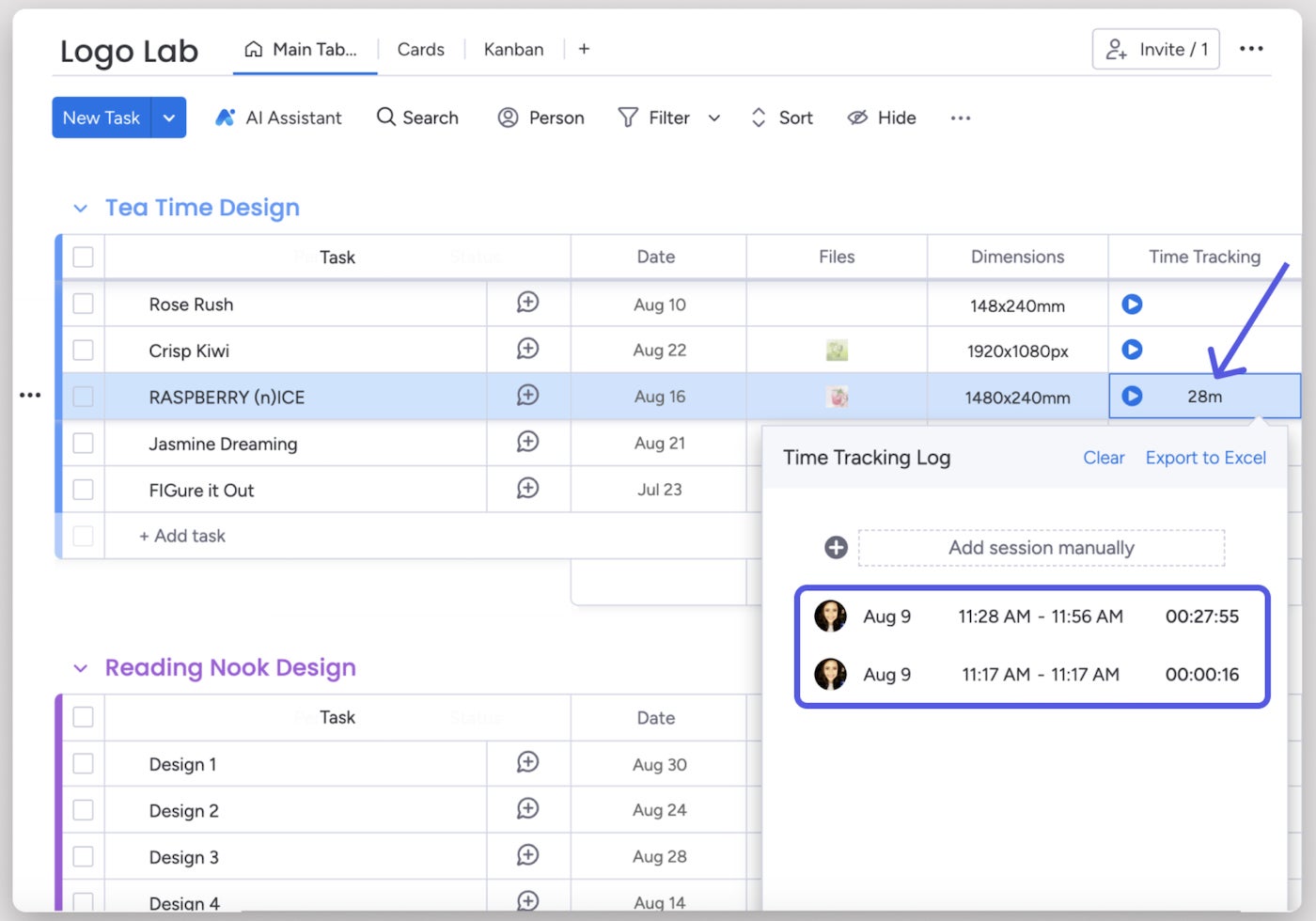

monday CRM is a highly customizable platform that enables users to manage personal contacts and tasks from a visual pipeline. It also offers features for logging notes and ideas, such as digital whiteboards and embedded files. Its intuitive interface and no-code automation make it easy for anyone to learn, navigate, and use.

Why I chose monday CRM

monday CRM offers over 200 templates you can customize for your personal use, including those for contact, task, or project management. You can use its dashboards as a pipeline for personal tasks, goals, and appointments. It also has a unique column for logging the time to complete each task. Moreover, you can receive automated reminders for each item in your pipeline to ensure that nothing slips through the cracks.

While monday CRM is an intuitive tool for managing tasks, it comes with a three-user minimum requirement that could make it more costly than other providers. If you want a cheaper alternative for individual users, try HubSpot CRM or ClickUp.

Check out our monday CRM review to learn more.

Pricing*

- Basic: $12/user/month, billed annually, or $15 when billed monthly. This includes unlimited contacts, 5GB file storage, templates for contact management, and activity logs.

- Standard: $17/user/month, billed annually, or $20 when billed monthly. This includes everything in the Basic plan, plus 20GB of file storage, AI tools, two-way email integration, and automations.

- Pro: $28/user/month, billed annually, or $33 when billed monthly. This includes everything in the Standard plan, plus 100GB file storage, email templates, calendar integration, and task time tracking.

- Enterprise: Contact the provider for a custom quote. This plan includes everything in the Pro plan, plus 1,000GB file storage, duplicate data warnings, a content directory, and client projects.

*Requires a minimum of three users.
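To see how the three-user minimum affects a solo user, here is a small cost calculation in Python. It assumes monday CRM bills the per-seat prices listed above for at least three seats and, for comparison, that ClickUp's Unlimited plan ($7/user/month, billed annually) has no seat minimum, which its pricing in this article does not mention either way. The `yearly_cost` helper is a hypothetical name used only for this illustration.

```python
def yearly_cost(price_per_seat_month: float, users: int, min_seats: int = 1) -> float:
    """Yearly cost of a per-seat plan that always bills at least `min_seats` seats."""
    billed_seats = max(users, min_seats)  # a solo user still pays for the minimum
    return price_per_seat_month * billed_seats * 12


if __name__ == "__main__":
    # Solo user on monday CRM Basic, assuming a three-seat minimum
    print(yearly_cost(12, users=1, min_seats=3))  # annual billing: 432
    print(yearly_cost(15, users=1, min_seats=3))  # monthly billing: 540
    # Solo user on ClickUp Unlimited, assuming no seat minimum
    print(yearly_cost(7, users=1))  # 84
```

Under those assumptions, one person on monday CRM's Basic plan pays for three seats: $432 a year with annual billing or $540 with monthly billing, versus $84 a year for a single ClickUp Unlimited seat.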

Features

- Unlimited contact database: Store unlimited contacts in monday CRM's database and log activities, tasks, calls, and meetings associated with each contact record.

- Automation center: Build workflows and automate reminders for task deadlines, emails, and appointments using monday CRM's ready-made automations.

- Monday AI: Leverage AI to generate and edit email messages, replies, subject lines, and email templates to save time and effort.

Pros and cons

| Pros | Cons |
|---|---|

Notion: Best for designing personal project databases

Overall score: 4.43/5

Pricing: 4.5/5

General features: 4.56/5

Ease of use: 4.6/5

Support: 3.63/5

Expert score: 3.81/5

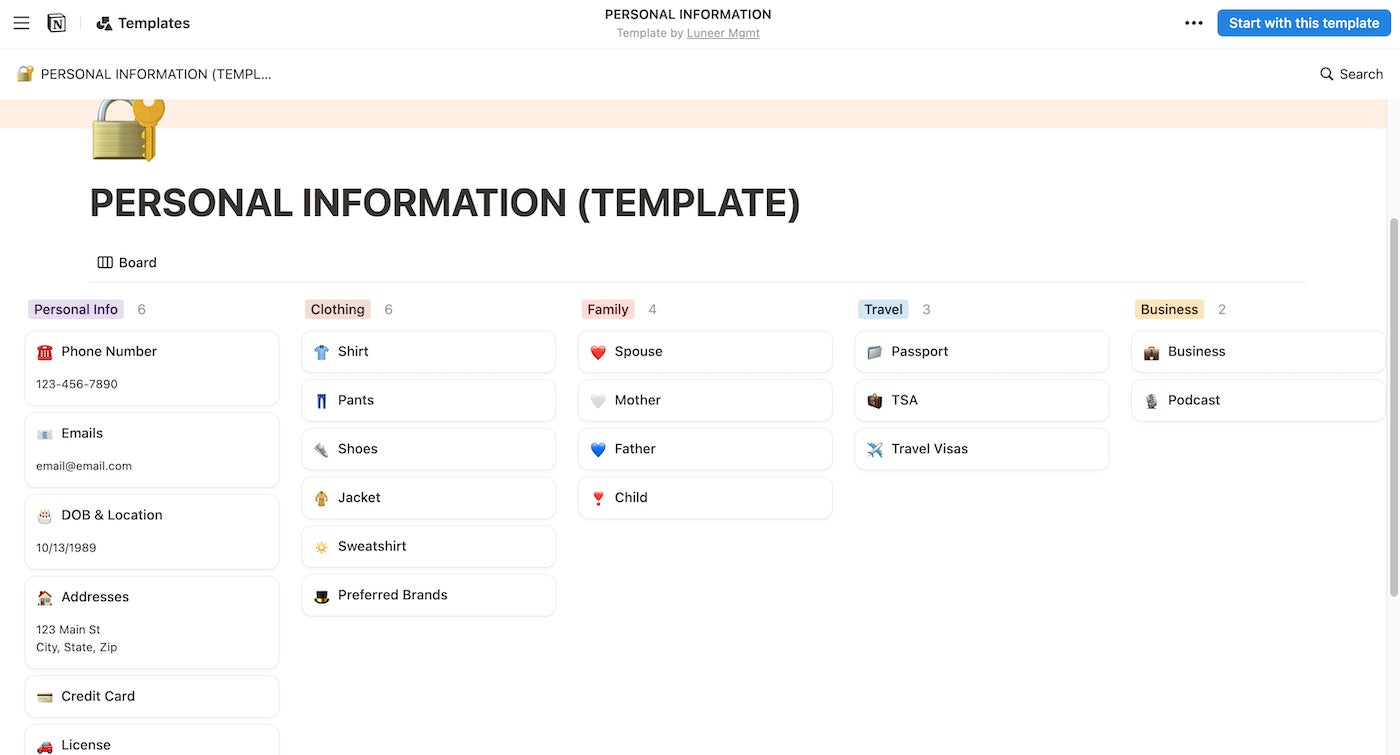

Notion is a project workspace that freelancers and individuals can customize into a personal CRM. It offers over 100 templates for tracking personal information, business data, and project timelines. This platform also has multiple data viewing options, including timelines, boards, and calendars. Its other features include to-do lists, notes, task tracking, goal tracking, and a website builder.

Why I chose Notion

With Notion, you can access a robust set of project and task management tools, including a centralized database for project documentation and knowledge. You can use its workspace to track and store various personal data, including notes, vacation details, journal entries, and job applications. Plus, its drag-and-drop functionality makes organizing data blocks and building workflows quick and easy.

Of all the providers on this list, Notion is the one tailored for personal use. However, it offers limited built-in communication and AI capabilities. If you want a platform that comes with these features out of the box, subscribe to HubSpot CRM or Pipedrive.

Pricing

- Free: $0 for one user with unlimited pages and blocks, 5MB file uploads, Notion Calendar, subtasks, and basic automations.

- Plus: $10/user/month, billed annually, or $12 when billed monthly. This includes everything in the Free plan, plus custom automation and unlimited file uploads, charts, and synced databases.

- Business: $15/user/month, billed annually, or $18 when billed monthly. This includes everything in the Plus plan, plus single sign-on and the ability to export workspaces as PDFs.

- Enterprise: Contact the provider for a custom quote. This includes everything in the Business plan, plus advanced workspace security controls and admin content search.

Features

- Wikis: Centralize all your knowledge and documentation in one organized repository with features for syncing updates and linking related documents.

- Project workspaces: Customize Notion's linked workspaces and use them for managing projects, tasks, checklists, timelines, and databases.

- Notion AI: Use Notion's AI tools to power database searches, generate documents, get insights from PDFs and images, and access GPT-4 and Claude.

Pros and cons

| Pros | Cons |
|---|---|

ClickUp: Best customizable personal CRM

Overall score: 4.37/5

Pricing: 4.5/5

General features: 4.72/5

Ease of use: 3/5

Support: 5/5

Expert score: 4.13/5

ClickUp is a complete work management system you can customize to fit any personal or business need. This platform is built on centralized workspaces that you can use to manage anything from mundane day-to-day tasks to large-scale projects. It also offers over 70 templates for various personal uses, including contact management, daily action plans, bucket lists, holiday planners, travel itineraries, and home projects.

SEE: 5 Best CRM With Project Management

Why I chose ClickUp

ClickUp offers deep customization for its workspaces, data views, task labels, dashboards, and reminders. It also offers unlimited tasks, file storage, custom views, and automation. You can make your workspace as simple or complex as you want. In addition, it has free and cost-scalable plans, making it ideal for individual users or freelancers looking for an affordable personal CRM.

ClickUp is not a traditional CRM, and its many customization options could be a drawback for users who prefer a simpler platform. Try HubSpot CRM or Pipedrive if you want a general-use CRM with little to no learning curve.

Want to know more? Read our full ClickUp review.

Pricing

- Free Forever: $0 for unlimited users, with 100MB storage, whiteboards, docs, automations, and unlimited tasks and custom views.

- Unlimited: $7/user/month, billed annually, or $10 when billed monthly. This includes everything in the Free Forever plan, plus email integration, time tracking, goals, and unlimited storage, integrations, and dashboards.

- Business: $12/user/month, billed annually, or $19 when billed monthly. This includes everything in the Unlimited plan, plus Google single sign-on, advanced automations, timelines, mind maps, and granular time estimates.

- Enterprise: Contact the provider for a custom quote. This includes everything in the Business plan, plus white labeling, team sharing for workspaces, and advanced customizations and permissions.

Features

- Customizable views: Visualize your daily, weekly, or monthly tasks using ClickUp's customizable list, board, and calendar views.

- Pre-built automations: Save time by automating routine tasks using pre-built or customized workflows and task statuses.

- Performance tracking: Track and analyze your progress across personal projects, money earned, and time spent on tasks using ClickUp's productivity dashboard widgets.

Pros and cons

| Pros | Cons |
|---|---|

What is a personal CRM?

Personal customer relationship management (CRM) software is a platform that lets individuals, solopreneurs, and freelancers maintain relationships with their networks. It offers useful features like contact management, a contact database, activity tracking, meeting scheduling, cadence reminders, and note-taking. These key features help users stay on top of their day-to-day tasks and maintain communication with personal contacts.
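Cadence reminders, one of the features listed above, come down to simple date arithmetic: each contact carries a last-contacted date and a preferred check-in interval. The Python sketch below is a hypothetical, minimal model of that idea; the `Contact` fields and the `next_touchpoint` and `due_for_follow_up` helpers are illustrative names, not the data model of HubSpot or any other tool reviewed here.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Contact:
    """One row in a hypothetical personal contact database."""
    name: str
    last_contacted: date
    cadence_days: int  # how often you want to reconnect, in days


def next_touchpoint(contact: Contact) -> date:
    """Date on which the next check-in with this contact falls due."""
    return contact.last_contacted + timedelta(days=contact.cadence_days)


def due_for_follow_up(contacts: list[Contact], today: date) -> list[Contact]:
    """Contacts whose check-in is due today or overdue, most overdue first."""
    due = [c for c in contacts if next_touchpoint(c) <= today]
    return sorted(due, key=next_touchpoint)


if __name__ == "__main__":
    contacts = [
        Contact("Ana", date(2025, 1, 1), cadence_days=30),
        Contact("Ben", date(2025, 1, 20), cadence_days=14),
        Contact("Cho", date(2025, 2, 10), cadence_days=90),
    ]
    for c in due_for_follow_up(contacts, today=date(2025, 2, 5)):
        print(f"Reach out to {c.name} (due {next_touchpoint(c)})")
```

With these sample contacts, Ana and Ben are flagged (due 2025-01-31 and 2025-02-03) while Cho is not yet due. A real personal CRM would also push these reminders to your calendar or inbox, which this sketch leaves out.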

Benefits of personal CRM tools

Sometimes you forget to return a call or a message, fail to send greetings on a special occasion, or even miss meetings despite having phone reminders. With the help of personal CRM apps, you can make sure you don't miss important milestones and appointments. Here are four quick benefits of using a personal CRM app:

- Organized personal contacts: With a CRM, you can keep all your contact data in a central database.

- Streamlined communication: Set reminders for a meeting or automate emails and other communications to stay connected promptly.

- Better work-life balance: Sync your personal CRM with your calendar to stay on top of important dates and events.

- Improved personal relationships: Stay connected with your friends, family, and colleagues, and send them timely greetings and congratulatory messages.

SEE: 8 Benefits of CRM Software for Businesses

Key features to look for in personal CRM software

When choosing a personal CRM, look for one that fits your feature and budget needs. Determine whether you need it for simple contact management or more complex project tracking. Consider these three standout features as you look for a personal CRM:

- Contact management: Store, organize, and track all your personal contacts in a central database that's easy to access.

- Calendar integration: Connect your CRM to your calendar to automate meeting scheduling, appointment reminders, and important task deadlines.

- Multichannel communication: Send emails, text messages, and social media messages to your contacts directly from your CRM.

How to choose the right personal CRM

Before committing to a personal CRM, it's important to research the software's features and capabilities to understand its ideal use cases. That way, you can make sure the provider can handle your CRM feature needs. I recommend asking the following questions to choose the best personal CRM for you:

- Does this CRM tool fit into my daily life and needs?

- Does this CRM offer tools for managing my contacts and appointments?

- Does this software offer a free version with all the basic features I need?

- Does this software have scalable CRM pricing plans that fit my budget?

- Can I integrate this tool with my email client, calendar, and communication apps?

- How easy is this software to learn, navigate, use, and implement?

- What do actual users say about this software on reputable review sites?

Methodology

I used an in-house rubric to find and rank the top five personal CRM solutions. I scored these providers based on defined criteria and subcategories for expected industry standards. In addition, I referenced each platform's online resources and user feedback to determine its ease of use and quality of customer support. Finally, I drew from my own research and trial experience with the software for my expert score.

Review my scoring criteria for more insights.

- Pricing: Weighted 25% of the full rating.

- General features: Weighted 30% of the total score.

- Ease of use: Weighted 20% of the total score.

- Customer support: Weighted 13% of the total score.

- Expert score: Weighted 12% of the total score.
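The weights above combine into an overall score as a weighted sum: multiply each category score (on the 0 to 5 scale used throughout this article) by its weight and add the results. The Python sketch below illustrates that arithmetic with hypothetical scores; the category keys and the `weighted_score` helper are illustrative names. Because the published scores are built up from subcategories, plugging in the rounded category scores shown in each review will not necessarily reproduce the overall score listed there.

```python
# Category weights from the methodology above (they sum to 100%)
WEIGHTS = {
    "pricing": 0.25,
    "general_features": 0.30,
    "ease_of_use": 0.20,
    "customer_support": 0.13,
    "expert_score": 0.12,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 0-5 category scores into one weighted total on the same 0-5 scale."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    return sum(weight * scores[name] for name, weight in WEIGHTS.items())


if __name__ == "__main__":
    # Hypothetical product scores, for illustration only
    example = {
        "pricing": 4.0,
        "general_features": 5.0,
        "ease_of_use": 4.0,
        "customer_support": 3.0,
        "expert_score": 5.0,
    }
    print(round(weighted_score(example), 2))  # 4.29
```

Because the weights sum to 1, a product scoring 5 in every category lands at exactly 5, so the total stays on the same 0 to 5 scale as the individual categories.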

Frequently asked questions

What is the best CRM for an individual?

The best CRM for an individual varies depending on the person's budget, feature needs, technical skill, and personal preferences. For example, if you're looking for a free and complete CRM, HubSpot could be your best option. If you want a non-traditional CRM to track your personal productivity, consider ClickUp or Notion.

Can you use Google as a CRM?

No, you can't use Google as CRM software. However, you can use Google Workspace productivity apps like Gmail, Contacts, Calendar, and Sheets as basic tools for managing your contacts and customer relationships.

Can I create my own CRM with Excel?

Yes, you can create a simple CRM system using Excel. Although the main purpose of this tool isn't CRM, you can store and organize your customer data and interactions in it. However, you might need to transition to a traditional CRM system once you scale up your business operations and need more advanced features.
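A spreadsheet CRM often pairs a contacts sheet with an interaction log. As a rough sketch, assume you export that log from Excel as a CSV file with `contact`, `date` (in YYYY-MM-DD form), `channel`, and `notes` columns; this layout, the sample rows, and the `last_interactions` helper are all hypothetical. Python's standard `csv` module can then report when you last reached out to each person:

```python
import csv
import io
from datetime import date

# Hypothetical interaction log, as it might look after exporting an Excel sheet to CSV
SAMPLE_CSV = """contact,date,channel,notes
Ana,2025-01-03,email,Sent project update
Ben,2025-01-10,phone,Caught up about the move
Ana,2025-02-01,meeting,Coffee downtown
"""


def last_interactions(csv_text: str) -> dict[str, date]:
    """Map each contact to the date of their most recent logged interaction."""
    latest: dict[str, date] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        when = date.fromisoformat(row["date"])
        name = row["contact"]
        if name not in latest or when > latest[name]:
            latest[name] = when
    return latest


if __name__ == "__main__":
    for name, when in sorted(last_interactions(SAMPLE_CSV).items()):
        print(f"{name}: last contacted {when}")
```

To run it against a real export, read the file with `open(path, newline="")` and pass its contents in place of `SAMPLE_CSV`.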
