The latest results from FrontierMath, a benchmark test for generative AI on advanced math problems, show OpenAI's o3 model performed worse than OpenAI originally stated. While newer OpenAI models now outperform o3, the discrepancy highlights the need to scrutinize AI benchmarks closely.

Epoch AI, the research institute that created and administers the test, released its latest findings on April 18.

OpenAI claimed o3 completed 25% of the test in December

Last year, the FrontierMath score for OpenAI o3 was one of the almost overwhelming number of announcements and promotions released during OpenAI's 12-day holiday event. The company claimed OpenAI o3, then its most powerful reasoning model, had solved more than 25% of the problems on FrontierMath. By comparison, most rival AI models scored around 2%, according to TechCrunch.


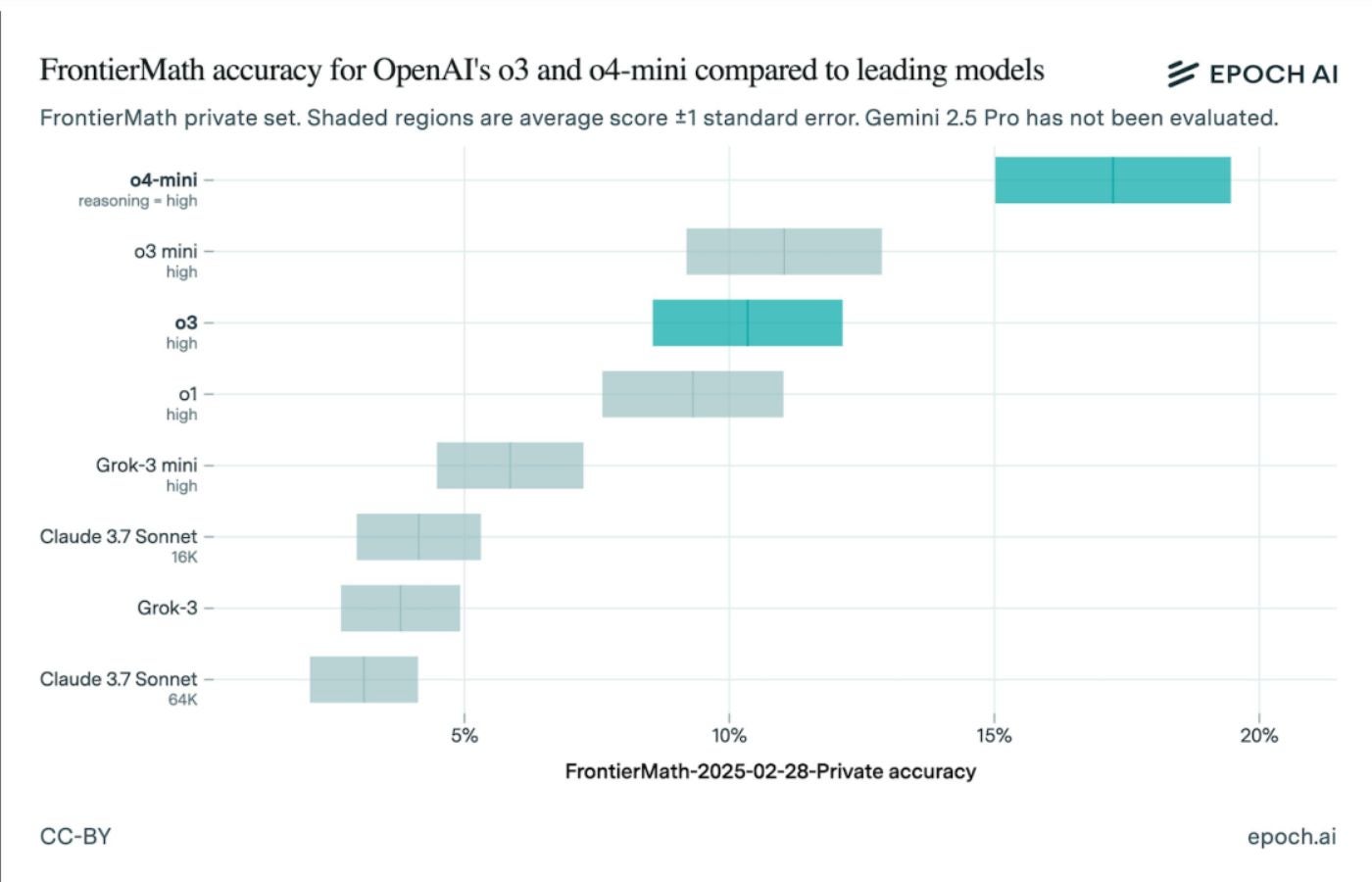

On April 18, Epoch AI released test results showing OpenAI o3 scored closer to 10%. So why is there such a large difference? Both the model and the test may have been different back in December. The version of OpenAI o3 submitted for benchmarking last year was a prerelease version. FrontierMath itself has also changed since December and now contains a different number of math problems. This isn't necessarily a reminder not to trust benchmarks; instead, it's a reminder to dig into the version numbers.

OpenAI o4 and o3 mini score highest on new FrontierMath results

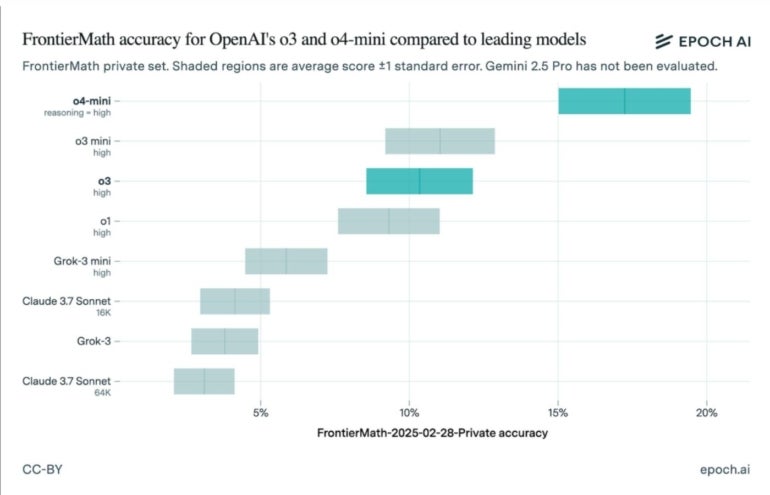

The updated results show OpenAI o4 with reasoning performed best, scoring between 15% and 19%. It was followed by OpenAI o3 mini, with o3 in third. The remaining rankings include:

- OpenAI o1

- Grok-3 mini

- Claude 3.7 Sonnet (16K)

- Grok-3

- Claude 3.7 Sonnet (64K)

Although Epoch AI independently administers the test, OpenAI originally commissioned FrontierMath and owns its content.

Criticisms of AI benchmarking

Benchmarks are a common way to compare generative AI models, but critics say the results can be influenced by test design or a lack of transparency. A July 2024 study raised concerns that benchmarks often overemphasize narrow task accuracy and suffer from non-standardized evaluation practices.
