NVIDIA announced a range of infrastructure, hardware, and resources for scientific research and enterprise at the International Conference for High Performance Computing, Networking, Storage, and Analysis, held Nov. 17 to Nov. 22 in Atlanta. Key among those announcements was the upcoming general availability of the H200 NVL AI accelerator.

The latest Hopper chip is coming in December

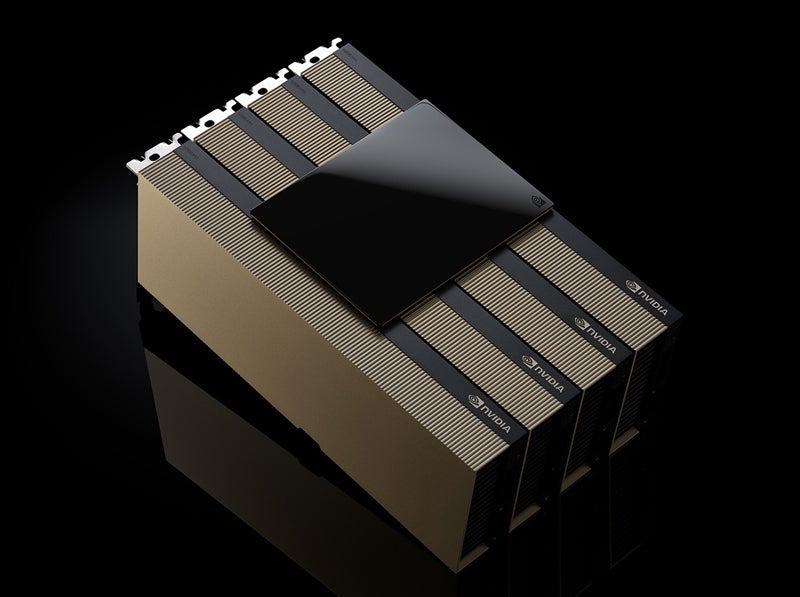

NVIDIA announced at a media briefing on Nov. 14 that platforms built with the H200 NVL PCIe GPU will be available in December 2024. Enterprise customers can refer to an Enterprise Reference Architecture for the H200 NVL. Purchasing the new GPU at enterprise scale will include a five-year subscription to the NVIDIA AI Enterprise service.

Dion Harris, NVIDIA’s director of accelerated computing, said at the briefing that the H200 NVL is ideal for data centers with lower power (under 20kW) and air-cooled accelerator rack designs.

“Companies can fine-tune LLMs within a few hours” with the upcoming GPU, Harris said.

The H200 NVL offers a 1.5x memory increase and a 1.2x bandwidth increase over the NVIDIA H100 NVL, the company said.

Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro will support the new PCIe GPU. It will also appear in platforms from Aivres, ASRock Rack, GIGABYTE, Inventec, MSI, Pegatron, QCT, Wistron, and Wiwynn.


Grace Blackwell chip rollout continuing

Harris also emphasized that partners and vendors have the NVIDIA GB200 NVL4 (Grace Blackwell) chip in hand.

“The rollout of Blackwell is proceeding smoothly,” he said.

Blackwell chips are sold out through the next year.

Unveiling the Next Phase of Real-Time Omniverse Simulations

In manufacturing, NVIDIA introduced the Omniverse Blueprint for Real-Time CAE Digital Twins, now in early access. This new reference pipeline shows how researchers or organizations can accelerate simulations and real-time visualizations, including real-time virtual wind tunnel testing.

Built on NVIDIA NIM AI microservices, Omniverse Blueprint for Real-Time CAE Digital Twins lets simulations that typically take weeks or months be performed in real time. This capability will be on display at SC’24, where Luminary Cloud will demonstrate how it can be leveraged in a fluid dynamics simulation.

“We built Omniverse so that everything can have a digital twin,” Jensen Huang, founder and CEO of NVIDIA, said in a press release.

“By integrating NVIDIA Omniverse Blueprint with Ansys software, we’re enabling our customers to tackle increasingly complex and detailed simulations more quickly and accurately,” said Ajei Gopal, president and CEO of Ansys, in the same press release.

CUDA-X library updates accelerate scientific research

NVIDIA’s CUDA-X libraries help accelerate the real-time simulations. These libraries are also receiving updates targeting scientific research, including changes to CUDA-Q and the release of a new version of cuPyNumeric.

Dynamics simulation functionality will be included in CUDA-Q, NVIDIA’s development platform for building quantum computers. The goal is to perform quantum simulations in practical times, such as an hour instead of a year. Google works with NVIDIA to build representations of their qubits using CUDA-Q, “bringing them closer to the goal of achieving practical, large-scale quantum computing,” Harris said.

NVIDIA also announced the latest version of cuPyNumeric, its accelerated computing library for scientific research. Designed for scientific settings that often use NumPy programs and run on a CPU-only node, cuPyNumeric lets those projects scale to thousands of GPUs with minimal code changes. It is currently being used in select research institutions.
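
To give a sense of what "minimal code changes" could look like in practice, here is a small sketch that is not taken from NVIDIA's materials. It assumes cuPyNumeric is importable as `cupynumeric` and falls back to plain NumPy when it is not installed. The function names `jacobi_step` and `relax`, the grid size, and the step count are hypothetical and chosen only for illustration.

```python
# Illustrative sketch: the only cuPyNumeric-specific line is the import.
# Assumption: the package is importable as `cupynumeric`; otherwise fall back to NumPy.
try:
    import cupynumeric as np
except ImportError:
    import numpy as np


def jacobi_step(grid):
    """Replace each interior cell with the average of its four neighbors."""
    new = grid.copy()
    new[1:-1, 1:-1] = 0.25 * (
        grid[:-2, 1:-1] + grid[2:, 1:-1] + grid[1:-1, :-2] + grid[1:-1, 2:]
    )
    return new


def relax(n=64, steps=100):
    """Run a fixed number of Jacobi steps on an n-by-n grid with a hot top edge."""
    grid = np.zeros((n, n))
    grid[0, :] = 1.0  # boundary condition: top edge held at 1.0
    for _ in range(steps):
        grid = jacobi_step(grid)
    return grid


if __name__ == "__main__":
    result = relax()
    print("mean value after relaxation:", float(result.mean()))
```

The array code itself is ordinary NumPy, which is the point of the sketch: under these assumptions, switching the import is the only edit. Whether a given program scales well across many GPUs would still depend on the workload and the cluster setup.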
